NVIDIA A100

NVIDIA A100: The Ideal AI & High-Performance Computing GPU

The NVIDIA A100 is a data center GPU engineered mainly for high-performance computing (HPC), artificial intelligence (AI), and other compute-intensive workloads. It is widely used in cloud computing, enterprise applications, and data centers worldwide. Built on the Ampere architecture, this GPU offers exceptional performance, flexibility, and productivity for AI and complex computing workloads.

In this guide, we’ll cover the NVIDIA A100 GPU, its hardware specs, its price, and how it compares to other cutting-edge accelerators, including the RTX 4090, Huawei Ascend 910C, and NVIDIA H100. We’ll also talk about why GPU4HOST is one of the best platforms for A100 cloud hosting.

About the NVIDIA A100 GPU

The NVIDIA A100 was launched as part of NVIDIA’s Ampere-based data center GPU lineup. It is designed to handle AI model training, deep learning, inference, and high-performance computing tasks efficiently. The third-generation Tensor Cores in the A100 deliver substantial performance gains over previous generations.

Key Characteristics of NVIDIA A100

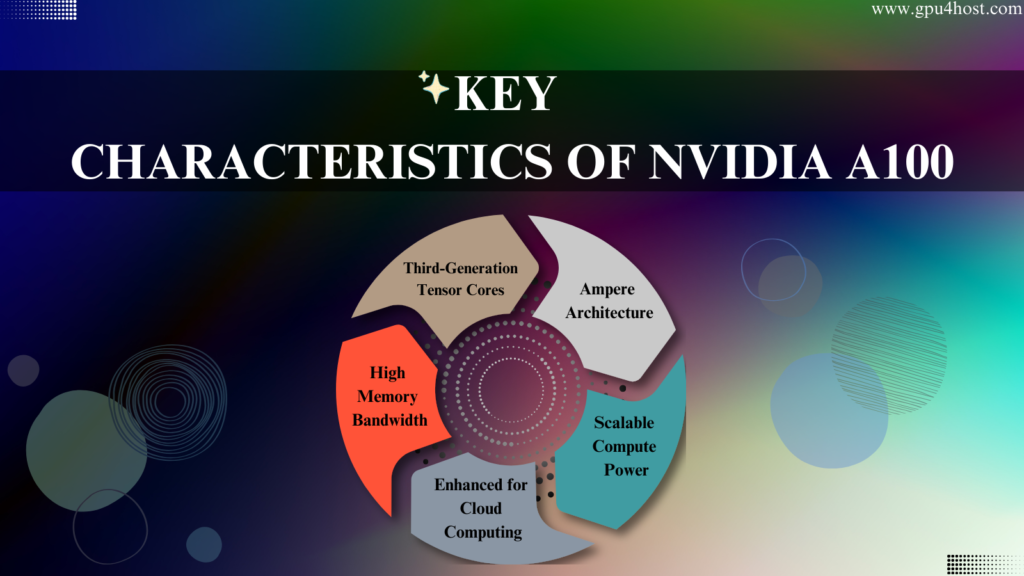

- Third-Generation Tensor Cores – Accelerate AI workloads, making the A100 a strong choice for deep learning training and inference.

- High Memory Bandwidth – Up to about 2 TB/s of memory bandwidth on the 80GB model, offering smooth performance for data-intensive applications.

- Ampere Architecture – Provides Multi-Instance GPU (MIG) support, allowing multiple users to share a single GPU through isolated partitions.

- Cloud-Ready – Available through cloud platforms such as GPU4HOST, reducing the need for costly hardware investments.

- Scalable Compute Power – Can be partitioned into up to seven GPU instances, improving utilization.
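As a rough illustration of how MIG partitioning limits what can be shared, the sketch below checks whether a requested mix of instances fits on one A100 40GB. It assumes the commonly documented slice budget (7 compute slices and 8 memory slices) and profile names such as `1g.5gb`. The function name `fits_on_a100` is hypothetical, and real MIG placement has additional rules that this simplified check ignores.

```python
# Simplified MIG capacity check for one A100 40GB (illustrative only).
# Assumption: 7 compute slices and 8 memory slices per GPU, with the
# per-profile slice costs below; real placement rules are stricter.
PROFILES = {
    "1g.5gb": (1, 1),   # (compute slices, memory slices)
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
COMPUTE_SLICES = 7
MEMORY_SLICES = 8


def fits_on_a100(requested):
    """Return True if the requested profiles fit within the slice budget."""
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


print(fits_on_a100(["1g.5gb"] * 7))          # seven small instances
print(fits_on_a100(["3g.20gb", "4g.20gb"]))  # two larger instances
print(fits_on_a100(["4g.20gb", "4g.20gb"]))  # needs 8 compute slices
```

A check like this is only a first filter. The actual set of valid layouts should be taken from NVIDIA's MIG documentation for the specific card.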

NVIDIA A100 Hardware Specifications (HW Specs)

Here’s a thorough look at the NVIDIA A100 40GB & 80GB hardware specifications:

| Specification | A100 40GB | A100 80GB |
| --- | --- | --- |
| Architecture | Ampere | Ampere |
| GPU Memory | 40GB HBM2 | 80GB HBM2e |
| Tensor Cores | 432 | 432 |
| CUDA Cores | 6912 | 6912 |
| PCIe Version | PCIe 4.0 | PCIe 4.0 |
| TDP (SXM4 form factor) | 400W | 400W |
| Memory Bandwidth | 1.6 TB/s | 2 TB/s |
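To put the memory figures in perspective, the following minimal sketch computes how long one full pass over GPU memory would take at the peak bandwidth listed in the table. The helper name `full_sweep_ms` is made up for illustration, and the result is a best case because real kernels rarely sustain peak bandwidth.

```python
# Time to read the full GPU memory once at the peak bandwidth in the table.
# Real workloads rarely sustain peak bandwidth, so treat these as lower bounds.
SPECS = {
    "A100 40GB": {"memory_gb": 40, "bandwidth_tb_s": 1.6},
    "A100 80GB": {"memory_gb": 80, "bandwidth_tb_s": 2.0},
}


def full_sweep_ms(memory_gb, bandwidth_tb_s):
    """Milliseconds to stream memory_gb at bandwidth_tb_s (1 TB = 1000 GB)."""
    seconds = memory_gb / (bandwidth_tb_s * 1000)
    return seconds * 1000


for name, spec in SPECS.items():
    print(f"{name}: {full_sweep_ms(**spec):.1f} ms per full memory sweep")
```

For memory-bound workloads, this kind of estimate is often a more useful guide than core counts.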

NVIDIA A100 Price & Accessibility

The NVIDIA A100 price varies depending on the memory configuration (40GB or 80GB) and the seller.

- 40GB Price – Generally ranges from $10,000 to $12,000.

- 80GB Price – Usually ranges from $15,000 to $20,000.

However, for organizations that want to use the A100 GPU without high setup costs, GPU4HOST offers budget-friendly A100 hosting services.
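One way to weigh buying against renting is a simple break-even calculation, sketched below. The purchase prices are the ends of the ranges quoted above. The hourly rate is a made-up placeholder and not a GPU4HOST quote, and the calculation ignores power, cooling, and host-server costs.

```python
# Break-even between buying an A100 and renting one by the hour.
# Purchase prices come from the ranges above; the hourly rate is a
# hypothetical placeholder, not an actual quote from any provider.
HYPOTHETICAL_RATE_PER_HOUR = 2.00  # USD, assumption for illustration


def break_even_hours(purchase_price, hourly_rate):
    """Hours of rental that cost as much as buying the card outright."""
    return purchase_price / hourly_rate


for label, price in [("40GB (low end)", 10_000), ("80GB (high end)", 20_000)]:
    hours = break_even_hours(price, HYPOTHETICAL_RATE_PER_HOUR)
    months = hours / 24 / 30
    print(f"{label}: {hours:,.0f} hours, about {months:.1f} months of 24/7 use")
```

Substituting a real hourly rate and an expected utilization gives a more realistic picture, since a purchased card that sits idle still costs its full price.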

NVIDIA A100 vs. Several Other Advanced GPUs

A100 vs. H100

The NVIDIA H100 is the successor to the A100 and is built on the newer Hopper architecture.

| Features | A100 | H100 |
| --- | --- | --- |
| Architecture | Ampere | Hopper |
| Performance | Excellent | Superior |
| Tensor Cores | 432 | 528 |
| CUDA Cores | 6912 | 16896 |
| Memory | 40GB/80GB | 80GB |

Opinion: The H100 is considerably more powerful, but the NVIDIA A100 remains a budget-friendly solution for AI tasks.
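The gap in raw core counts can be quantified with a few lines of arithmetic. This sketch uses only the figures from the comparison table, and the helper name `core_ratios` is hypothetical. Core counts alone do not predict real-world speedup, since clock speeds, memory, and supported precisions also differ between the two architectures.

```python
# Ratio of H100 to A100 core counts, using the figures from the table above.
# This compares counts only; it is not a performance benchmark.
A100 = {"cuda_cores": 6912, "tensor_cores": 432}
H100 = {"cuda_cores": 16896, "tensor_cores": 528}


def core_ratios(newer, older):
    """Return newer/older ratios for each spec key in `older`."""
    return {key: newer[key] / older[key] for key in older}


for key, ratio in core_ratios(H100, A100).items():
    print(f"{key}: {ratio:.2f}x")
```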

A100 vs. RTX 4090

| Characteristics | RTX 4090 | A100 |
| --- | --- | --- |
| Architecture | Ada Lovelace | Ampere |
| CUDA Cores | 16384 | 6912 |
| Tensor Cores | 512 | 432 |
| Memory | 24GB GDDR6X | 40GB HBM2 / 80GB HBM2e |

Opinion: The A100 excels at artificial intelligence and high-performance computing workloads, while the RTX 4090 is a better fit for gaming and creative tasks.

Huawei Ascend 910C vs. NVIDIA A100

Huawei’s Ascend 910C is an AI accelerator that competes with NVIDIA’s A100 in deep learning.

| Features | A100 | Huawei Ascend 910C |
| --- | --- | --- |
| AI Performance | 312 TFLOPS FP16 | 256 TFLOPS FP16 |
| Ecosystem | Extensive | Limited |
| Power Efficiency | Lower | Higher |

Opinion: The NVIDIA A100 has mature software support and strong ecosystem integration, which makes it the more practical choice compared to the Huawei Ascend 910C.
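To see what the FP16 figures in the table could mean in practice, the sketch below turns peak throughput into a rough wall-clock estimate. The workload size and the utilization factor are hypothetical placeholders, and the function name `estimated_days` is invented for this example. Sustained throughput depends heavily on the software stack, so this is an illustration rather than a benchmark.

```python
# Rough wall-clock estimate: time = total FLOPs / (peak FLOP/s * utilization).
# Peak figures come from the table above; the workload size and the
# utilization factor are hypothetical placeholders.
PEAK_FP16_TFLOPS = {"A100": 312, "Ascend 910C": 256}
HYPOTHETICAL_WORKLOAD_FLOPS = 1e21
ASSUMED_UTILIZATION = 0.4  # fraction of peak actually sustained


def estimated_days(total_flops, peak_tflops, utilization):
    """Days needed on one accelerator at the given sustained utilization."""
    seconds = total_flops / (peak_tflops * 1e12 * utilization)
    return seconds / 86_400


for name, peak in PEAK_FP16_TFLOPS.items():
    days = estimated_days(HYPOTHETICAL_WORKLOAD_FLOPS, peak, ASSUMED_UTILIZATION)
    print(f"{name}: about {days:.0f} days")
```

With equal utilization the estimate scales inversely with peak throughput, so the ratio between the two results equals the ratio of the table's TFLOPS figures.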

Some Other Popular NVIDIA GPUs for Cloud Computing & AI

NVIDIA offers a range of GPUs suited to artificial intelligence, machine learning, cloud computing, and more. Here are two notable options:

NVIDIA RTX A4000

The RTX A4000 is a professional GPU developed for AI and design-related tasks. Its features include:

- 16GB GDDR6 memory, offering robust performance for a variety of tasks.

- Ampere-based architecture for efficient, high-performance computing.

- Optimized for deep learning, CAD, and 3D modeling.

NVIDIA A40

The NVIDIA A40 is a robust GPU engineered for 3D rendering, visual computing, and AI-based tasks. Its main specifications are:

- Tensor Cores for AI acceleration.

- 48GB GDDR6 memory for managing huge and complex datasets.

- Perfect for enterprise-grade AI, expert visualization, and virtual workstations.

Both of these GPUs offer strong performance for AI practitioners, researchers, and organizations, making them solid choices for AI workloads in the cloud.

Reasons to Choose GPU4HOST for A100 Cloud Hosting

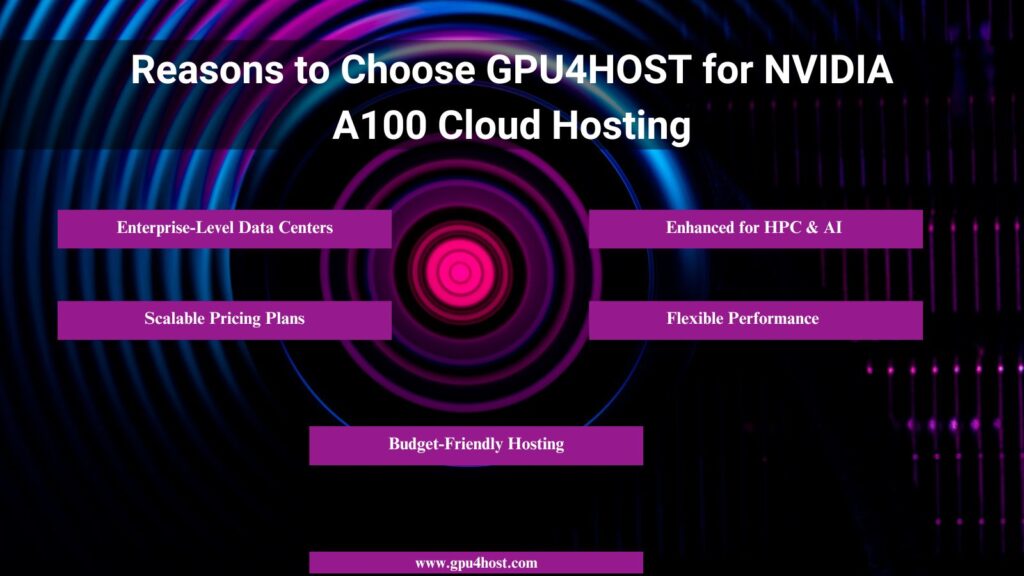

If you want the power of the NVIDIA A100 without investing heavily in costly hardware, GPU4HOST offers a reliable solution:

- Enterprise-Level Data Centers – Our powerful infrastructure guarantees 99.99% uptime, built-in redundancy, and secure environments for AI-based tasks.

- Enhanced for HPC & AI – Our cutting-edge cloud environment is built for AI model training, machine learning, deep learning, and high-level scientific computations.

- Scalable Pricing Plans – Select from hourly, monthly, or on-demand plans customized according to your business needs.

- Flexible Performance – Scale GPU resources as your computing requirements grow.

- Budget-Friendly Hosting – Get enterprise-level A100 hosting within your budget, with no unnecessary or hidden costs.

With GPU4HOST, you get high reliability, scalability, and optimal performance for your HPC and deep learning workloads.

Harness the full power of deep learning and artificial intelligence with GPU4HOST servers, where high power, scalability, and optimal performance come together to boost your business success.

Conclusion

The A100 is an ideal GPU for a variety of tasks, including AI and high-performance computing (HPC). It provides high-end performance, flexibility, and productivity, which makes it a strong option for top-tier data centers and cloud AI workloads. Even with newer GPUs such as the H100 available, the A100 still holds its ground as a budget-friendly, high-performance GPU for AI experts.

For those who want this power without the hefty maintenance costs, GPU4HOST offers affordable cloud hosting services. With its flexible, powerful infrastructure, GPU4HOST supports seamless and productive AI, high-performance computing, and machine learning deployments within budget.

Looking for reliable NVIDIA A100 hosting? Start your journey with GPU4HOST now!

FAQ

- Is the NVIDIA A100 good for gaming?

No. The A100 is not optimized for gaming. It is engineered for artificial intelligence, ML, and HPC tasks.

- What is the NVIDIA A100?

It is a data center GPU engineered for AI and other compute-intensive tasks.

- When was NVIDIA A100 released?

The A100 was officially launched in May 2020.

- How many cores does the NVIDIA A100 have?

The A100 GPU has a total of 6,912 CUDA cores.

- How much does an NVIDIA A100 cost?

The NVIDIA A100 typically costs between $10,000 and $20,000, depending on the memory configuration.

- What is NVIDIA A100 used for?

It is mainly used for AI model training, deep learning inference, complex simulations, and other advanced computing workloads.

- Why is the NVIDIA A100 so expensive?

The NVIDIA A100 is costly because of its cutting-edge architecture, high memory bandwidth, and specialization in artificial intelligence and HPC, which make it a reliable solution for experts and organizations.

- What is NVIDIA DGX A100?

The NVIDIA DGX A100 is an integrated system that combines eight A100 GPUs, built for AI and research.

- Is the NVIDIA A100 better than the 4090?

The NVIDIA A100 is the better choice for deep learning, AI, and high-performance computing tasks, while the RTX 4090 is better suited to gaming and other creative activities.

- Where to buy the NVIDIA A100?

The NVIDIA A100 can be purchased from NVIDIA’s official website and certified vendors, or rented through cloud platforms such as GPU4HOST.