About NVIDIA NIM: Streamlining AI Infrastructure Efficiently

AI continues to transform almost every industry, and NVIDIA remains at the forefront of innovation with its advanced hardware and software. A recent example is NVIDIA NIM, a platform that changes how businesses deploy and run AI workloads on NVIDIA hardware. In this guide, we explain what NVIDIA NIM is, how it works, and what it offers AI-driven enterprises. We also cover how to use the platform effectively and the role GPU4HOST plays in providing the infrastructure needed to run NVIDIA solutions reliably.

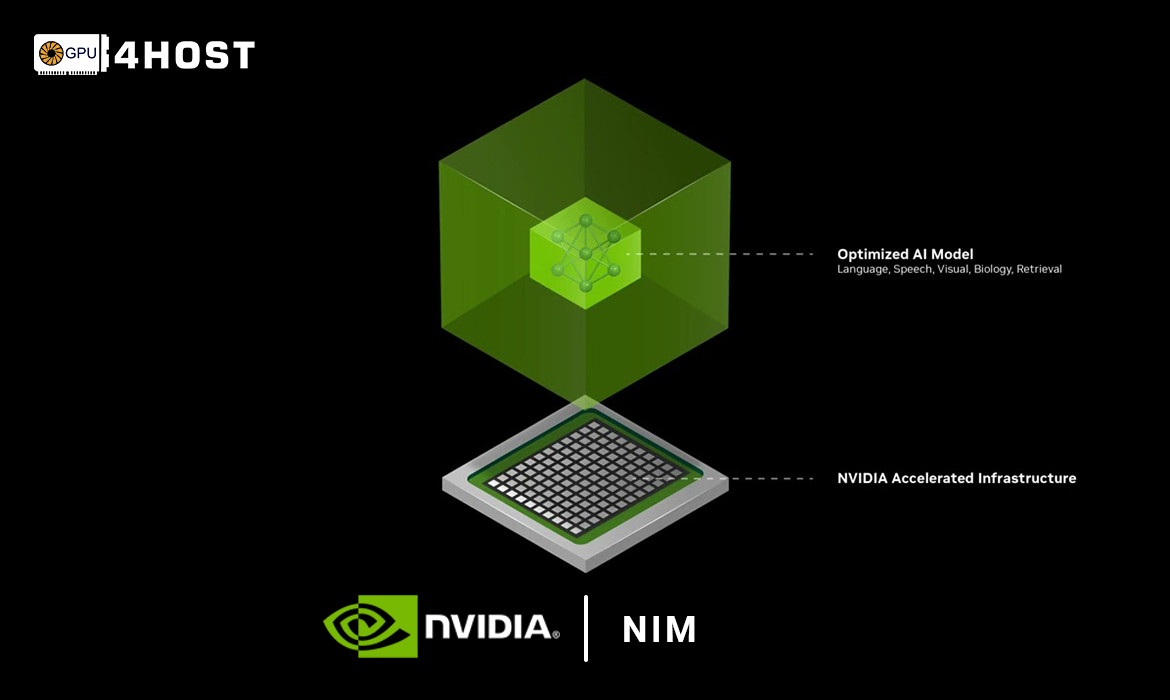

What is NVIDIA NIM?

NVIDIA NIM (NVIDIA Inference Microservices) is a set of containerized microservices for deploying AI models on NVIDIA-accelerated infrastructure. That infrastructure can include GPU servers in on-premises data centers and cloud instances. Each microservice packages a model with an optimized inference engine and exposes standard APIs. This helps businesses run their NVIDIA AI workloads, make better use of GPU resources, and automate setup for optimized performance. AI models keep growing more demanding, and so does the infrastructure needed to support them. NIM acts as a hub for deploying these models, helping them run smoothly and use hardware flexibly.

NIM was designed to meet the growing demands of AI technologies. As organizations build more advanced neural networks and AI-based systems, running them efficiently becomes a major challenge. This is where NVIDIA NIM comes in: it streamlines the management of NVIDIA software and hardware while making sure resources are used efficiently.

How NVIDIA NIM Works

NVIDIA NIM acts as a layer between NVIDIA hardware, such as GPU servers and AI chips, and the software used to run AI models. It runs on cloud systems, on-premises data centers, and hybrid infrastructure, supporting a wide range of AI workloads. The following points explain how NIM works:

Customization & Automation

A key feature of NVIDIA NIM is its ability to automate repetitive tasks. NIM's automation reduces the need for manual intervention, whether you are provisioning resources for a new AI model or scaling infrastructure to handle growing workloads. In addition, the NVIDIA NIM API lets organizations tailor deployments to their specific business needs.

NVIDIA NIM API

The NVIDIA NIM API plays a central role in automating and managing AI deployments. Each NIM microservice exposes industry-standard endpoints; for large language models, this is an OpenAI-compatible HTTP API. Through these endpoints, developers and IT administrators can send requests to deployed models, script deployment procedures, and monitor service health and performance. Because the API follows familiar conventions, it fits into existing DevOps pipelines, making it simpler to bring NVIDIA hardware into large enterprise workflows.
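NIM for LLMs follows the OpenAI chat-completions convention, so you can build a request with the Python standard library alone. The sketch below makes several assumptions. It assumes a NIM running locally on port 8000 and uses `meta/llama3-8b-instruct` purely as an example model name. The helper names `build_chat_request` and `send` are hypothetical.

```python
import json
import urllib.request

# Assumption: a NIM for LLMs container is reachable locally on port 8000;
# adjust the base URL for your own deployment.
NIM_BASE_URL = "http://localhost:8000/v1"

# Assumption: illustrative model identifier only; GET {NIM_BASE_URL}/models
# on your deployment lists the name it actually serves.
MODEL_NAME = "meta/llama3-8b-instruct"


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{NIM_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request to a live endpoint and return the first reply's text."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

`send(build_chat_request("..."))` only works against a running endpoint. Building the request separately makes it easy to unit-test pipeline code without a GPU.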

Enhanced Resource Allocation

As AI models grow more complex, efficient resource allocation becomes crucial. NVIDIA NIM aims to optimize how resources such as GPUs and AI chips are assigned to different tasks. This reduces idle hardware and minimizes interruptions when processing large datasets or running AI models.
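The article does not describe the algorithms NIM uses internally, so the following is only a generic illustration of the idea. It uses a best-fit-decreasing heuristic that packs jobs onto GPUs by memory so that less capacity sits idle. The `Gpu` class, the `allocate` function, and all memory figures are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Gpu:
    """A hypothetical GPU with a name and remaining free memory in GB."""
    name: str
    free_gb: float
    jobs: list[str] = field(default_factory=list)


def allocate(jobs: dict[str, float], gpus: list[Gpu]) -> dict[str, str | None]:
    """Best-fit decreasing: place the largest jobs first, each on the GPU whose
    remaining memory is the smallest that still fits the job."""
    placement: dict[str, str | None] = {}
    for job, need_gb in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        candidates = [g for g in gpus if g.free_gb >= need_gb]
        if not candidates:
            placement[job] = None  # no GPU has room; the job has to wait
            continue
        best = min(candidates, key=lambda g: g.free_gb - need_gb)
        best.free_gb -= need_gb
        best.jobs.append(job)
        placement[job] = best.name
    return placement
```

Placing large jobs first and choosing the tightest fit leaves the bigger free blocks available for later work. This is a common way to reduce fragmentation, though it is not guaranteed to be optimal.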

Smooth Integration with AI Pipelines

The NVIDIA NIM API integrates smoothly with existing AI pipelines. This means infrastructure management is not a separate task but an integral part of the AI development and deployment process. Developers can build infrastructure automation into their existing DevOps workflows, speeding up AI model deployment.
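One common way to make deployment part of a pipeline is a readiness gate. The CI step waits until the model service reports ready before it runs tests or shifts traffic. This sketch assumes the `/v1/health/ready` path documented for NIM for LLMs, so check it against the NIM you deploy. `http_probe` and `wait_until_ready` are hypothetical helpers. The probe and sleep functions are injectable so the retry loop can be tested offline.

```python
import time
import urllib.request
from typing import Callable

# Assumption: readiness path as documented for NIM for LLMs; verify it for
# the specific NIM microservice you deploy.
READY_URL = "http://localhost:8000/v1/health/ready"


def http_probe(url: str) -> bool:
    """Return True if the endpoint answers with HTTP 200, False on any error."""
    try:
        with urllib.request.urlopen(url, timeout=5) as response:
            return response.status == 200
    except OSError:  # URLError, HTTPError, and timeouts are all OSError subclasses
        return False


def wait_until_ready(
    probe: Callable[[], bool],
    attempts: int = 30,
    delay_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call `probe` until it succeeds or the attempts run out.
    Sleeps between attempts, but not after the final one."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay_s)
    return False
```

In a pipeline step you might end with `raise SystemExit(0 if wait_until_ready(lambda: http_probe(READY_URL)) else 1)`. That way, a service that never becomes ready fails the job instead of letting later steps run against it.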

Centralized Control & Monitoring

NVIDIA NIM gives users a single interface to control and monitor NVIDIA-accelerated resources. Whether you are running a local GPU cluster or using cloud services, NIM brings your AI resources under one roof. This makes it easier for businesses to track GPU usage, check performance, and optimize workflows based on real-time data.
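NIM's own monitoring interface is not shown in this article. As a stand-in, this sketch reads per-GPU utilization and memory with `nvidia-smi`'s CSV query mode, a widely available way to collect the kind of real-time data described above. `GpuStats`, `parse_nvidia_smi`, and `read_gpu_stats` are hypothetical names. The parser assumes every queried field is numeric, although some GPUs report non-numeric placeholders for unsupported fields.

```python
from __future__ import annotations

import subprocess
from dataclasses import dataclass

# Fields requested from nvidia-smi, in the order they appear on each line.
QUERY = "index,name,utilization.gpu,memory.used,memory.total"


@dataclass
class GpuStats:
    index: int
    name: str
    util_pct: int       # GPU utilization in percent
    mem_used_mib: int   # memory in use, MiB
    mem_total_mib: int  # total memory, MiB

    @property
    def mem_pct(self) -> float:
        return 100.0 * self.mem_used_mib / self.mem_total_mib


def parse_nvidia_smi(csv_text: str) -> list[GpuStats]:
    """Parse lines like '0, NVIDIA A100-SXM4-40GB, 35, 10240, 40960'."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, name, util, used, total = [f.strip() for f in line.split(",")]
        stats.append(GpuStats(int(index), name, int(util), int(used), int(total)))
    return stats


def read_gpu_stats() -> list[GpuStats]:
    """Run nvidia-smi on the local host and parse its output."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return parse_nvidia_smi(out)
```

On a GPU host, `read_gpu_stats()` returns one `GpuStats` per device. `mem_pct` converts its memory usage into a percentage that is easy to chart or alert on.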

Advantages of Using NVIDIA NIM

NVIDIA NIM offers several advantages for organizations using NVIDIA hardware and AI-based solutions. Here are the key benefits:

Improved Scalability

As AI workloads grow, so does the need for scalable infrastructure. NVIDIA NIM makes it easier to scale resources up or down with workload demand. Organizations can then handle growth without over-investing in hardware they do not need.
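The article does not specify how NIM decides when to scale. One widely used rule comes from the Kubernetes Horizontal Pod Autoscaler, which is swapped in here purely as an illustration. It sets `desired = ceil(current_replicas * current_metric / target_metric)`. It skips changes that fall within a small tolerance and clamps the result to configured bounds. `desired_replicas` and its default bounds are hypothetical. `current_metric` could be, for example, average GPU utilization across replicas.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 8,
    tolerance: float = 0.1,
) -> int:
    """HPA-style proportional scaling with a tolerance band and bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, take 2 replicas averaging 90% GPU utilization against a 60% target. The ratio is 1.5, so the rule asks for 3 replicas. The tolerance band keeps small fluctuations from triggering constant resizing.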

Streamlined AI Infrastructure Management

By providing centralized control over NVIDIA resources, NIM streamlines the management of AI infrastructure. This is especially important for businesses with hybrid environments that need to balance heavy workloads across on-premises and cloud systems.

Use Cases for NVIDIA NIM

NVIDIA NIM is versatile and can be applied across many industries and AI workloads. Notable use cases include:

Cloud-Based AI Solutions

Because it integrates with cloud infrastructure, NIM is a strong option for organizations that offer NVIDIA AI solutions as a core part of their cloud services. It lets cloud service providers manage NVIDIA-powered servers while ensuring that clients get the performance they expect.

AI Research & Development

Researchers working on complex AI models need access to substantial computing power. NVIDIA NIM helps them manage these resources efficiently, so they can run and evaluate their AI models as quickly and accurately as possible.

How GPU4HOST Optimizes Your NVIDIA NIM Experience

To get the most out of NVIDIA NIM, you need solid hardware infrastructure, and this is where GPU4HOST excels. GPU4HOST specializes in high-performance GPU servers and ensures your models have the computing power they need to succeed. You may be working with NVIDIA AI chips, deploying in the cloud, or scaling AI models. In each case, GPU4HOST provides infrastructure that pairs well with NVIDIA NIM. With GPU4HOST's GPU servers, businesses can smoothly integrate NVIDIA NIM into their AI workflows, ensuring accessibility and reliability for their AI-driven applications.

Conclusion

NVIDIA NIM is a game-changing platform that streamlines the deployment and management of AI models on NVIDIA hardware, giving teams the tools they need to succeed. With the NVIDIA NIM API, organizations can automate and tailor their resource management, making AI models more productive and reliable. Paired with GPU4HOST's GPU servers, it gives any AI-driven enterprise a powerful setup for unlocking NVIDIA NIM's full potential.